An Easy Way to Create Verifiable Claims on Ceramic


We’ve discussed in our Attestations vs. Credentials blog and Verifiable Credentials guide how to make two claim standards “work together” on ComposeDB, using shared interfaces to improve querying efficiency and composability. Taking a step back, one of the core benefits these formats unlock for developers is the tamper-evident verification that their signed payloads provide. More broadly, developers (and, by extension, the users of their applications) who want to use verifiable claims value claim portability.

Why does claim portability matter?

A public blockchain reveals the behavior of a user address to anyone who observes its transaction history, but claims produced in 'off-chain' environments need other qualities to be inherently 'provable'. At the same time, many developers agree that for certain types of data, or certain application environments, a fully on-chain architecture is both cost-inefficient and non-performant. As a result, some of the systems we’re seeing built on Ceramic today use standards like W3C Verifiable Credentials or EAS Offchain Attestations to provide portability assurances.

Each document written to Ceramic in the form of a Verifiable Credential or off-chain EAS Attestation payload contains everything needed to later recall the document from Ceramic and validate the signatures. The document can therefore be considered “whole” and simply requires minor reconstruction (to create a Verifiable Presentation in the case of a VC, for example).

So, what’s the issue?

In the Attestations vs. Credentials post, we used a simple VC definition meant only to illustrate a trust credential issued from one account to another. The credential subject therefore contained only two fields:

isTrusted: a boolean field that represents whether the party is trusted or not

id: the DID of the recipient account

Despite the brevity of this credential type, the payload of one example EIP-712 instance was quite verbose:

```json
{
  "issuer": "did:pkh:eip155:1:0x06801184306b5eb8162497b8093395c1dfd2e8d8",
  "@context": [
    "https://www.w3.org/2018/credentials/v1",
    "https://beta.api.schemas.serto.id/v1/public/trusted-reviewer/1.0/ld-context.json"
  ],
  "type": ["VerifiableCredential", "Trusted"],
  "credentialSchema": {
    "id": "https://beta.api.schemas.serto.id/v1/public/trusted/1.0/json-schema.json",
    "type": "JsonSchemaValidator2018"
  },
  "credentialSubject": {
    "isTrusted": true,
    "id": "did:pkh:eip155:1:0xcc2158d7e1b0fffd4db6f51e35f05e00d8fe30b2"
  },
  "issuanceDate": "2023-12-05T21:03:03.061Z",
  "proof": {
    "verificationMethod": "did:pkh:eip155:1:0x06801184306b5eb8162497b8093395c1dfd2e8d8",
    "created": "2023-12-05T21:03:03.061Z",
    "proofPurpose": "assertionMethod",
    "type": "EthereumEip712Signature2021",
    "proofValue": "0x47fadf4bab9c0d111b6bf304eb2c72e6419c636f7b117761ce5cf4926a79074e073e2560b90d78230deac06a7afc705813f3f403fa51967e2da0e7783d4dae0d1b",
    "eip712": {
      "domain": {
        "chainId": 1,
        "name": "VerifiableCredential",
        "version": "1"
      },
      "types": {
        "EIP712Domain": [
          { "name": "name", "type": "string" },
          { "name": "version", "type": "string" },
          { "name": "chainId", "type": "uint256" }
        ],
        "CredentialSchema": [
          { "name": "id", "type": "string" },
          { "name": "type", "type": "string" }
        ],
        "CredentialSubject": [
          { "name": "id", "type": "string" },
          { "name": "isTrusted", "type": "bool" }
        ],
        "Proof": [
          { "name": "created", "type": "string" },
          { "name": "proofPurpose", "type": "string" },
          { "name": "type", "type": "string" },
          { "name": "verificationMethod", "type": "string" }
        ],
        "VerifiableCredential": [
          { "name": "@context", "type": "string[]" },
          { "name": "credentialSchema", "type": "CredentialSchema" },
          { "name": "credentialSubject", "type": "CredentialSubject" },
          { "name": "issuanceDate", "type": "string" },
          { "name": "issuer", "type": "string" },
          { "name": "proof", "type": "Proof" },
          { "name": "type", "type": "string[]" }
        ]
      },
      "primaryType": "VerifiableCredential"
    }
  }
}
```

As a result, the schemas used to capture and store the VCs required several layers of interfaces with many subfields. Additionally, as mentioned before, the data itself would still require a process of normalization to create a presentation to be verified (for example the context key used in the ComposeDB schema would need to be replaced with @context).
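To make that normalization step concrete, here is a minimal sketch under stated assumptions: it supposes a stored credential uses a GraphQL-safe `context` key in place of `@context` (as described above) and that every other top-level key is unchanged. `StoredCredential`, `toW3CCredential`, and `toPresentation` are hypothetical names, not code from either repository, and the presentation envelope is left unsigned; a real Verifiable Presentation would typically also carry a proof from the holder.

```typescript
// Hypothetical shape of a VC read back from ComposeDB, where the JSON-LD
// "@context" key had to be stored under the GraphQL-safe name "context".
type StoredCredential = {
  context: string[];
  [key: string]: unknown;
};

// The same credential with its W3C key names restored.
type W3CCredential = {
  "@context": string[];
  [key: string]: unknown;
};

// Rename the top-level `context` key back to `@context`; other keys are copied as-is.
function toW3CCredential(stored: StoredCredential): W3CCredential {
  const { context, ...rest } = stored;
  return { ...rest, "@context": context };
}

// Wrap credentials in an unsigned Verifiable Presentation envelope (VC Data Model 1.x).
function toPresentation(credentials: W3CCredential[]) {
  return {
    "@context": ["https://www.w3.org/2018/credentials/v1"],
    type: ["VerifiablePresentation"],
    verifiableCredential: credentials,
  };
}

// Usage with a trimmed-down stored record.
const restored = toW3CCredential({
  context: ["https://www.w3.org/2018/credentials/v1"],
  type: ["VerifiableCredential", "Trusted"],
});
console.log(JSON.stringify(toPresentation([restored]), null, 2));
```

Only the top-level key is handled here; if a schema renamed nested keys as well, the same idea would need to be applied recursively.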

This example also requires the end user to sign a message in their browser wallet each time a Verifiable Credential is issued, regardless of whether they use a JWS or EIP-712 proof type. You can imagine how cumbersome this becomes for a user who wants to issue several in one sitting.

The entirety of this setup seems like overkill for an assertion with such a simple data model. While it may be a necessity for architectures that require the W3C Verifiable Credential standard, those that don’t can ensure claim portability more simply. What if we instead leverage Ceramic’s core capabilities to design a simpler architecture for verifiable claims?

Example Application: Walk-Through

To illustrate how to do this, we’ve put together the following repository that this section of the article will reference:

https://github.com/ceramicstudio/did-session-claims

Before we boot up a local deployment of our application, let’s talk through how user authentication is configured and how it relates to the portability of the claims we’ll produce.

Authentication

This example uses WalletConnect’s Web3Modal to pass authentication logic down through the child components of this application, which also lets us reuse the connected wallet for Ceramic authentication. If you navigate into /src/pages/_app.tsx, you can see how our Wagmi configuration wraps our ComposeDB wrapper, and together they wrap any React components we render to the client.

Let’s take a deeper look at the ComposeDB wrapper imported and used at the top level of our application. If you open /src/fragments/index.tsx, you’ll notice that we use React’s createContext API to make our ComposeDB client (with our cast runtime definition), an isAuthenticated value, and a keySession object available to any child components that import and call the exported useComposeDB hook.

Take a look at what’s happening in the useEffect hook within the ComposeDB component:

```tsx
// compose, ceramic, Context, ComposeDBProps and the module-level
// `let keySession: DIDSession` are declared elsewhere in this file.
import { useEffect, useState } from "react";
import { useWalletClient } from "wagmi";
import type { GetWalletClientResult } from "@wagmi/core";
import { DIDSession } from "did-session";
import { EthereumWebAuth, getAccountId } from "@didtools/pkh-ethereum";

export const ComposeDB = ({ children }: ComposeDBProps) => {
  // Hooks are called unconditionally on every render, as React requires
  const { data: walletClient } = useWalletClient();
  const [isAuthenticated, setAuth] = useState(false);

  useEffect(() => {
    async function authenticate(walletClient: GetWalletClientResult | undefined) {
      if (walletClient) {
        // Account ID for the connected address, plus an auth method backed by the wallet
        const accountId = await getAccountId(walletClient, walletClient.account.address);
        console.log(walletClient.account.address, accountId);
        const authMethod = await EthereumWebAuth.getAuthMethod(walletClient, accountId);

        // Obtain a session key scoped to the models in our composite
        const session = await DIDSession.get(accountId, authMethod, { resources: compose.resources });

        // Set the session DID on the Ceramic client
        await ceramic.setDID(session.did);
        await session.did.authenticate();
        console.log("Auth'd:", session.did.id);
        localStorage.setItem("did", session.did.id);

        // Expose the session to children through the context value below
        keySession = session;
        setAuth(true);
      }
    }
    authenticate(walletClient);
  }, [walletClient]);

  return (
    <Context.Provider value={{ compose, isAuthenticated, keySession }}>
      {children}
    </Context.Provider>
  );
};
```

Since we know our application will be using a wallet client (given our use of Web3Modal), we rely on Wagmi’s useWalletClient hook to obtain it, and derive from it the account ID and authentication method we later pass as arguments to create a DID session. In effect, this creates a DID session key for the user and authorizes that key to write only to the specific data models referenced in the {resources: compose.resources} object argument. This is the predominant way blockchain accounts use Sign-In with Ethereum and CACAO for authorization: a parent did:pkh authorizes child session keys with limited and temporary capabilities.
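For a rough picture of what `{ resources: compose.resources }` contains: as far as we understand the `@composedb/client` implementation, `compose.resources` maps each model in the runtime definition to a `ceramic://*?model=<model stream ID>` URI, and those URIs are what appear under "Resources" in the Sign-In with Ethereum message the user signs. The sketch below reproduces that mapping over a hypothetical, trimmed-down definition with placeholder IDs; `RuntimeDefinitionLike` and `sessionResources` are illustrative names, and the URI format is an assumption to verify against the client version you have installed.

```typescript
// Hypothetical, trimmed-down runtime definition: only the parts needed here.
type RuntimeDefinitionLike = {
  models: Record<string, { id: string }>;
};

// One capability resource per model (assumed URI format), so the session key
// can only write to streams whose model appears in this list.
function sessionResources(definition: RuntimeDefinitionLike): string[] {
  return Object.values(definition.models).map(
    (model) => `ceramic://*?model=${model.id}`
  );
}

// Placeholder (not real) model stream IDs for the interface and the Trust type.
const exampleDefinition: RuntimeDefinitionLike = {
  models: {
    VerifiableClaim: { id: "kjzl6hvfrbw6c_VERIFIABLE_CLAIM_PLACEHOLDER" },
    Trust: { id: "kjzl6hvfrbw6c_TRUST_PLACEHOLDER" },
  },
};

console.log(sessionResources(exampleDefinition));
```

Scoping by model rather than by individual stream is what lets one session key create new Trust documents without another wallet prompt, while still keeping it away from unrelated models.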

Finally, you’ll see how our keySession variable is redefined as the current authenticated session, thus exposing it to any React components that import useComposeDB.

DID Class Capabilities

If you take a deeper look at the DID class, you’ll notice a createJWS method that creates a JWS over a specified payload, signed by the currently authenticated DID. This is what we’ll use to create an explicit JWS signature over our “Trust Credential” payloads, which makes it easy to determine who signed a payload and whether the credential has been altered.
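For orientation, `createJWS` returns a DagJWS object: a JWS in general JSON serialization, with a base64url `payload` and a `signatures` array whose entries hold a base64url `protected` header and a `signature`. The sketch below only decodes such an object with Node's `Buffer` to show where the signed claim lives; it does no cryptographic verification (that is the job of `verifyJWS`). `DagJWSLike` is a local stand-in rather than the type exported by the `dids` package, and the sample is hand-built with a dummy signature, assuming the payload is base64url-encoded JSON.

```typescript
import { Buffer } from "node:buffer";

// Local stand-in with only the DagJWS fields used here (general JWS JSON serialization).
type DagJWSLike = {
  payload: string; // base64url-encoded payload
  signatures: { protected: string; signature: string }[];
};

type TrustClaim = { recipient: string; trusted: boolean };

// Decode one base64url segment into JSON. This does NOT verify anything.
function decodeSegment<T>(segment: string): T {
  return JSON.parse(Buffer.from(segment, "base64url").toString("utf8")) as T;
}

function readClaim(jws: DagJWSLike): TrustClaim {
  return decodeSegment<TrustClaim>(jws.payload);
}

// Hand-built sample with a placeholder recipient and a dummy signature.
const sample: DagJWSLike = {
  payload: Buffer.from(
    JSON.stringify({ recipient: "did:pkh:eip155:1:0x00000000000000000000000000000000000000aa", trusted: true })
  ).toString("base64url"),
  signatures: [
    {
      protected: Buffer.from(JSON.stringify({ alg: "EdDSA" })).toString("base64url"),
      signature: "dummy-signature",
    },
  ],
};

console.log(readClaim(sample));
```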

Data Models

If you navigate into the /composites directory, you’ll find the schema definition we’ll use to store this simple assertion type:

```graphql
## our broadest claim type
interface VerifiableClaim
  @createModel(description: "A verifiable claim interface") {
  controller: DID! @documentAccount
  recipient: DID! @accountReference
}

type Trust implements VerifiableClaim
  @createModel(accountRelation: LIST, description: "A trust credential") {
  controller: DID! @documentAccount
  recipient: DID! @accountReference
  trusted: Boolean!
  jwt: String! @string(maxLength: 100000)
}
```

You’ll immediately notice that this definition is much more concise than the definitions we used for the Verifiable Credential or Attestation versions of the account trust credential. The recipient and trusted fields store the plain values our users write to Ceramic, while the jwt field holds our portable claim. It is redundant with the plain values in the two fields above it, but it provides the tamper-evident and portable qualities we want.
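Because the jwt value duplicates `recipient` and `trusted`, a consumer can cross-check the two before relying on the plain fields. Here is a minimal sketch, assuming the query shape used later in validateBaseCredential (`recipient { id }`, `controller { id }`, `trusted`, `jwt`, `id`) and a claim that has already been decoded from the JWS and had its signature verified. `TrustNode`, `SignedClaim`, and `plainFieldsMatch` are hypothetical names.

```typescript
// Assumed shape of a Trust node as returned by a ComposeDB query.
type TrustNode = {
  id: string; // StreamID of the Trust document
  controller: { id: string }; // did:pkh of the account that wrote it
  recipient: { id: string };
  trusted: boolean;
  jwt: string; // Base64-encoded, JSON-serialized JWS
};

// The values that were signed into the JWS payload.
type SignedClaim = { recipient: string; trusted: boolean };

// The plain fields are only as trustworthy as their agreement with the signed claim.
function plainFieldsMatch(node: TrustNode, claim: SignedClaim): boolean {
  return node.recipient.id === claim.recipient && node.trusted === claim.trusted;
}

const example: TrustNode = {
  id: "placeholder-stream-id",
  controller: { id: "did:pkh:eip155:1:0x01" },
  recipient: { id: "did:pkh:eip155:1:0xaa" },
  trusted: true,
  jwt: "",
};
console.log(plainFieldsMatch(example, { recipient: "did:pkh:eip155:1:0xaa", trusted: true })); // true
```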

Writing Our Credentials

If you imagine our Trust model without the jwt subfield, a write query to our ComposeDB client might look something like this:

```tsx
const data = await compose.executeQuery(`
  mutation {
    createTrust(input: {
      content: {
        recipient: "${completeCredential.recipient}"
        trusted: ${completeCredential.trusted}
      }
    })
    {
      document {
        id
        recipient {
          id
        }
        trusted
      }
    }
  }
`);
```

In our case, if you look at the /src/components/Claim.tsx component, you’ll notice that the saveBaseCredential method takes a few additional steps to create a signed, Base64-encoded JWS string before saving to Ceramic:

```tsx
// compose and keySession come from useComposeDB(); destination is the address entered in the form.
const saveBaseCredential = async () => {
  // Only the essential values are signed: the recipient's did:pkh and the trust flag
  const credential = {
    recipient: `did:pkh:eip155:1:${destination.toLowerCase()}`,
    trusted: true,
  };

  if (keySession) {
    // Sign with the session key, then serialize the JWS object and Base64-encode it
    const jws = await keySession.did.createJWS(credential);
    const jwsJsonStr = JSON.stringify(jws);
    const jwsJsonB64 = Buffer.from(jwsJsonStr).toString("base64");

    const completeCredential = {
      ...credential,
      jwt: jwsJsonB64,
    };

    const data = await compose.executeQuery(`
      mutation {
        createTrust(input: {
          content: {
            recipient: "${completeCredential.recipient}"
            trusted: ${completeCredential.trusted}
            jwt: "${completeCredential.jwt}"
          }
        })
        {
          document {
            id
            recipient {
              id
            }
            trusted
            jwt
          }
        }
      }
    `);
  }
};
```

First, notice how we define a credential object that includes only the essential values each assertion needs (the recipient, represented by their did:pkh, and the trusted value). Next, we use the createJWS method (mentioned previously) from our authenticated DID session (represented by the keySession we obtain by destructuring useComposeDB at the top of the component). Finally, we serialize the resulting JWS object to JSON, Base64-encode it, and save it to Ceramic. Despite the field name, the stored value is a Base64-encoded JWS object rather than a compact JWT.
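Isolated from the React component, the storage encoding is a JSON-then-Base64 round trip. The sketch below uses a hand-built stand-in for the JWS object (dummy signature) to show that decoding the stored string returns the original object. It also illustrates why interpolating the value into the GraphQL string literal works: standard Base64 output contains only A-Z, a-z, 0-9, `+`, `/`, and `=`, so there are no quotes or backslashes to escape. `encodeForStorage` and `decodeFromStorage` are illustrative names, not functions from the repository.

```typescript
import { Buffer } from "node:buffer";

// Minimal stand-in for the JWS object returned by createJWS.
type DagJWSLike = {
  payload: string;
  signatures: { protected: string; signature: string }[];
};

// What saveBaseCredential does before the mutation: JSON, then Base64.
function encodeForStorage(jws: DagJWSLike): string {
  return Buffer.from(JSON.stringify(jws)).toString("base64");
}

// What validateBaseCredential does after the query: Base64, then JSON.
function decodeFromStorage(field: string): DagJWSLike {
  return JSON.parse(Buffer.from(field, "base64").toString("utf8")) as DagJWSLike;
}

const jws: DagJWSLike = {
  payload: "eyJ0cnVzdGVkIjp0cnVlfQ", // base64url of {"trusted":true}
  signatures: [{ protected: "eyJhbGciOiJFZERTQSJ9", signature: "dummy" }],
};

const field = encodeForStorage(jws);
console.log(/^[A-Za-z0-9+/]*={0,2}$/.test(field)); // true: nothing to escape in a GraphQL string
console.log(JSON.stringify(decodeFromStorage(field)) === JSON.stringify(jws)); // true
```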

Notice that our credential doesn’t hard-code a controller (i.e. the account creating the assertion). As we’ll show later, the DID that was used to create the JWS can easily be extracted from the JWS (along with the assertion values), so it is inherently included.
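As a rough illustration of how the signer travels with the claim: a JWS signed by a did:key session key is expected to carry a `kid` in its protected header, a DID URL made of the did:key plus a `#` fragment. The sketch below reads that value without checking the signature, so its output is only a hint; `verifyJWS` (used in validateBaseCredential) is what actually ties the signature to the key. The header contents and the `signerFromProtectedHeader` helper are assumptions for illustration.

```typescript
import { Buffer } from "node:buffer";

// Read `kid` from a base64url-encoded protected header and drop the fragment,
// leaving the bare DID. Decoding only: no signature check happens here.
function signerFromProtectedHeader(protectedHeader: string): string | undefined {
  const header = JSON.parse(Buffer.from(protectedHeader, "base64url").toString("utf8")) as {
    kid?: string;
  };
  return header.kid?.split("#")[0];
}

// Hand-built header with a placeholder did:key (not a real key).
const protectedHeader = Buffer.from(
  JSON.stringify({ alg: "EdDSA", kid: "did:key:z6MkPLACEHOLDER#z6MkPLACEHOLDER" })
).toString("base64url");

console.log(signerFromProtectedHeader(protectedHeader)); // did:key:z6MkPLACEHOLDER
```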

Getting Started

To begin, start by cloning the example app repository and installing your dependencies:

```bash
git clone https://github.com/ceramicstudio/did-session-claims && cd did-session-claims
npm install
```

We’ve set up a script for you in /scripts/commands.mjs to automate the creation of your ComposeDB server configuration and admin credentials. You’ll use it in the next step by running the following command in your terminal:

```bash
npm run generate
```

If you take a look at your admin_seed.txt and composedb.config.json files, you’ll now see that an admin seed and server configuration have been generated for you. Taking a closer look at your server configuration, you’ll notice the following key-value pair within the JSON object:

```json
"ipfs": { "mode": "remote", "host": "http://localhost:5001" }
```

Unlike several of our other tutorials where we run IPFS in “bundled” mode, we need our IPFS instance running separately for this walk-through so we can fetch a commit’s DAG node from IPFS and obtain the session key that signed it (to prove that the session key used to create the Ceramic commit is the same one that invoked the createJWS method referenced above).

We’ll want to start our IPFS daemon next (with pubsub enabled):

```bash
ipfs daemon --enable-pubsub-experiment
```

In a separate terminal, allow CORS (to enable our frontend to access data from the Kubo RPC API):

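```bash
ipfs config --json API.HTTPHeaders.Access-Control-Allow-Origin '["'"$origin"'", "http://localhost:8080","http://localhost:3000"]'
```

Kubo reads these headers when the daemon starts, so restart the IPFS daemon after changing them. Finally, start your application: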

```bash
nvm use 20
npm run dev
```

This command invokes the script created for you in /scripts/run.mjs, which deploys our ComposeDB schema on your local node, boots up a GraphiQL instance on port 5005, and starts your Next.js interface on port 3000.

Creating Assertions

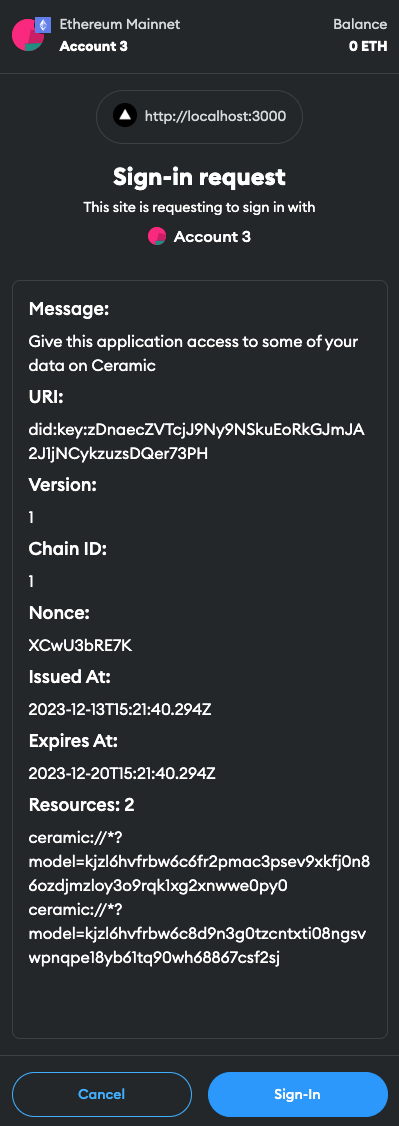

If you navigate to http://localhost:3000 in your browser, you’ll be able to authenticate yourself by clicking the “Connect Wallet” button in the navigation (using Web3Modal). You’ll also be prompted with a second signature request - this one is from the /src/fragments/index.tsx context we discussed earlier, which creates the DID session, with limited scope to write data only against the VerifiableClaim interface and Trust types (shown in “resources” below):

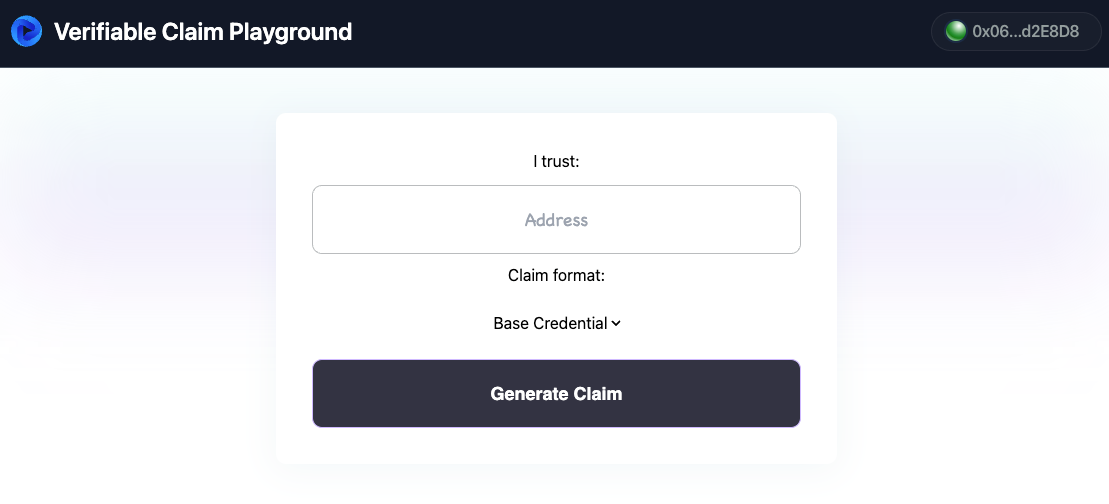

You should now see the following in your browser:

As mentioned above, the corresponding React component can be found at /src/components/Claim.tsx. We’ve added some logs into the code for you, so we recommend inspecting your console in your browser as you follow along.

Go ahead and enter a dummy wallet address into the Address input field, and click “Generate Claim.” You’ll notice several new logs in your browser console - we’ll walk through these as we explain what’s happening.

Above, we discussed how the saveBaseCredential method uses our authenticated session key to sign our credential and save it to Ceramic with a mutation executed through our ComposeDB client. In your text editor, you’ll also see that we invoke validateBaseCredential at the end of this call.

Let’s take a look at what’s happening here:

const validateBaseCredential = async () => {

const credential: any = await compose.executeQuery(

`query {

trustIndex(last: 1){

edges{

node{

recipient{

id

}

controller {

id

}

trusted

jwt

id

}

}

}

}`

);

console.log(credential.data)

if (credential.data.trustIndex.edges.length > 0) {

//obtain did:key used to sign the credential

const credentialToValidate = credential.data.trustIndex.edges[0].node.jwt;

const json = Buffer.from(credentialToValidate, "base64").toString();

const parsed = JSON.parse(json);

console.log(parsed);

const newDid = new DID({ resolver: KeyResolver.getResolver() });

const result = await newDid.verifyJWS(parsed);

const didFromJwt = result.didResolutionResult?.didDocument?.id;

console.log('This is the payload: ', result.payload);

//obtain did:key used to authorize the did-session

const stream = credential.data.trustIndex.edges[0].node.id;

const ceramic = new CeramicClient("http://localhost:7007");

const streamData = await ceramic.loadStreamCommits(stream);

const cid: CID | undefined = streamData[0] as CID;

const cidString = cid?.cid;

//obtain DAG node from IPFS

const url = `http://localhost:5001/api/v0/dag/get?arg=${cidString}&output-codec=dag-json`;

const data = await fetch(url, {

method: "POST",

headers: {

"Content-Type": "application/json",

},

});

const toJson: DagJWS = await data.json();

const res = await newDid.verifyJWS(toJson);

const didFromDag = res.didResolutionResult?.didDocument?.id;

console.log(didFromJwt, didFromDag);

if (didFromJwt === didFromDag) {

console.log(

"Valid: " +

didFromJwt +

" signed the JWT, and has a DID parent of: " +

credential.data.trustIndex.edges[0].node.controller.id

);

} else {

console.log("Invalid");

}

}

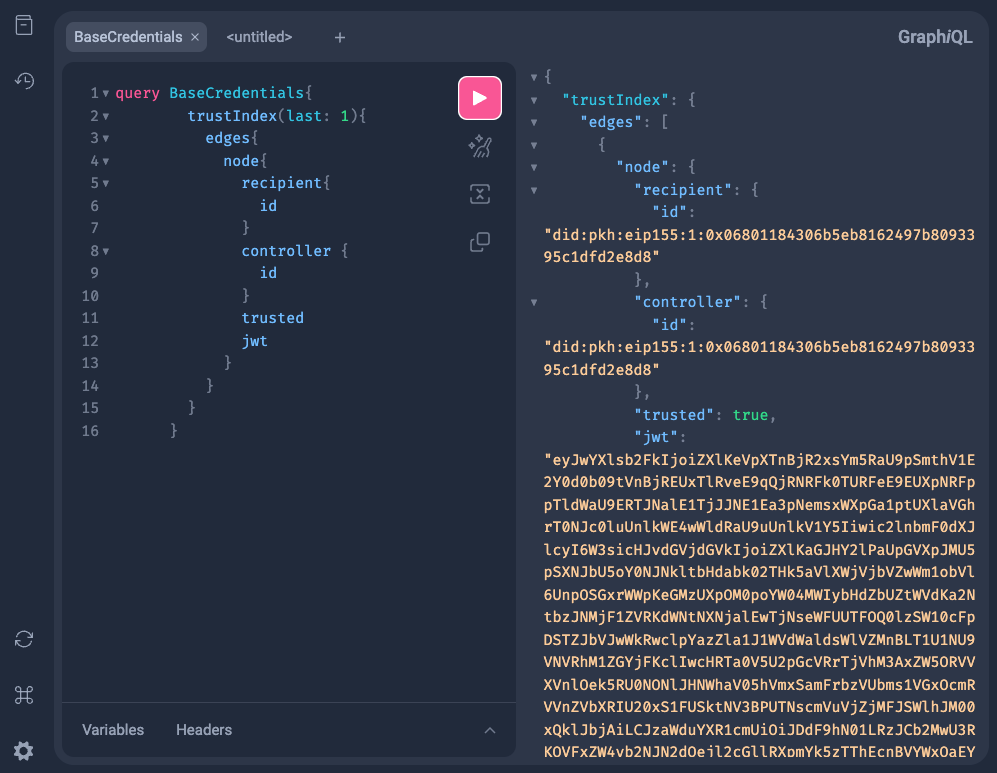

};First, we’re grabbing the most recent Trust model instance from our index (the one we just created). After we’ve extracted the value of the jwt field from this node and converted it back to a JSON object, notice how we eventually are able to extract the result of the id subfield after verifying the signature (which we subsequently assign to didFromJwt. This is the did:key that was used to sign the JWT.

Next, to prove ownership back to the parent did:pkh account, we’ll show how to obtain the did:key that signed the Ceramic commit from its DAG node. After loading the stream commits based on the node’s StreamID and isolating the CID of the first (genesis) commit, we make a fetch request to our separately running IPFS node on port 5001, asking for the DAG node tied to that Ceramic commit (for more information on the various API options, visit Kubo RPC API).
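The DAG lookup itself is a plain Kubo RPC call, and Kubo’s RPC API only accepts POST requests, which is why the component uses `method: "POST"`. The sketch below builds the same `dag/get` URL with `URL` and `URLSearchParams`, which handle query-string escaping for you. `dagGetUrl` is a hypothetical helper, and the default base URL assumes the local daemon on port 5001 started earlier.

```typescript
// Build a Kubo RPC `dag/get` URL for a commit CID, requesting dag-json output.
function dagGetUrl(cid: string, apiBase = "http://localhost:5001"): string {
  const url = new URL("/api/v0/dag/get", apiBase);
  url.searchParams.set("arg", cid);
  url.searchParams.set("output-codec", "dag-json");
  return url.toString();
}

// Usage against a running daemon (CORS must allow your frontend's origin):
// const res = await fetch(dagGetUrl(cidString), { method: "POST" });
// const dagJws = await res.json();

console.log(dagGetUrl("bafyPLACEHOLDER"));
// http://localhost:5001/api/v0/dag/get?arg=bafyPLACEHOLDER&output-codec=dag-json
```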

Just as we extracted the did:key from our jwt subfield, we can use the JSON result to verify the commit’s signature and isolate the id field from the resolved DID document. We then log “Valid” if the two DIDs match. This shows that the did:key used to generate the portable JWS over the data is the same session key that signed the Ceramic commit, and Ceramic only accepts that commit if the session key was authorized by the stream’s controller (the parent did:pkh).

Finally, just for fun, we’ve set up an embedded GraphiQL instance on the same page for you - go ahead and submit the default query to view an instance result:

What have we learned?

Different teams will have different requirements for verifiable claims. For some, a standard like the W3C Verifiable Credential will be a requirement. Other teams might care more about claim portability: an easy, reliable way to tie signed data to its signer.

It’s also likely obvious to some readers that the logic we’ve set up to create a credential object and sign it with the did:key runs at the application level, not at the Ceramic protocol level. A malicious application could exploit this to make its users generate false assertions (though the same could be said about the temporary write privileges a user grants to a certain set of ComposeDB model definitions when they sign and create their DID session).

At the very least, we hope you walk away from this article with a better understanding of how DID sessions work, how they’re created, and where the DAG nodes can be queried from a Ceramic node’s IPFS instance. There are trade-offs to be considered across all verifiable claim options, so we hope these ideas spark future creativity around new solutions.

Finally, if you’re interested in diving deeper into what can be done with DID sessions, check out this repository branch that shows how to allow users to generate VCs and EAS attestations using their session signature:

https://github.com/ceramicstudio/user-controlled-claims/tree/did-session

Navigate to our forum if you have questions or want to contribute to our RFC on verifiable claims!